Introduction to Congestion Management and Congestion Avoidance

Solutions

Every network needs a solution to traffic congestion. Limited network resources must be balanced against user requirements so that user requirements are met and network resources are fully used.

Congestion management and avoidance are commonly used to relieve traffic congestion.

- Congestion management provides means to manage and control traffic when traffic congestion occurs. Packets sent from one interface are placed into multiple queues that are marked with different priorities. The packets are sent based on the priorities. Different queue scheduling mechanisms are designed for different situations and lead to different results.

- Congestion avoidance is a flow control technique used to relieve network overload. By monitoring the usage of network resources such as queues and memory buffers, a device automatically drops packets when congestion tends to worsen.

Port Queue Scheduling

You can configure strict priority (SP) scheduling or weight-based scheduling for the eight queues on each interface of a NetEngine 8000 F. Based on the scheduling algorithm, the eight queues fall into three groups: priority queuing (PQ) queues, weighted fair queuing (WFQ) queues, and low priority queuing (LPQ) queues.

- SP scheduling applies to PQ queues. Packets in high-priority queues are scheduled first, so delay-sensitive services (such as VoIP) can be assigned high priorities.

  However, if the bandwidth of high-priority packets in PQ queues is not limited, low-priority packets cannot obtain bandwidth and are starved.

  Configuring all eight queues on an interface as PQ queues is allowed but not recommended. PQ queues are generally reserved for delay-sensitive services.

- Weight-based scheduling, such as weighted round robin (WRR), weighted deficit round robin (WDRR), and weighted fair queuing (WFQ), applies to WFQ queues.

- LPQ queues are implemented on high-speed interfaces (such as Ethernet interfaces).

  SP scheduling also applies to LPQ queues. The difference from PQ queues is that, when congestion occurs, a PQ queue can preempt the bandwidth of WFQ queues whereas an LPQ queue cannot. Only after all packets in the PQ and WFQ queues have been scheduled is the remaining bandwidth assigned to packets in the LPQ queue.

  In practice, best effort (BE) flows can be put into the LPQ queue. When the network is overloaded, BE flows can then be limited so that other services are processed first.

PQ, WFQ, and LPQ queues can be used separately or in combination across the eight queues of an interface.
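To make the interplay of the three groups concrete, here is a minimal Python sketch of one interface. It is a toy model, not the NetEngine 8000 F implementation: the class name `PortScheduler`, the queue names, and the weights are hypothetical, packets are counted instead of bytes, and a plain packet-count weighted round robin stands in for the device's WFQ/WDRR scheduling.

```python
from collections import deque


class PortScheduler:
    """Toy model of PQ/WFQ/LPQ scheduling on one interface (hypothetical, not Huawei code).

    pq:  queue names served by strict priority, highest priority first
    wfq: {queue name: positive weight}, served by packet-count weighted round robin
    lpq: queue names served by strict priority, only when PQ and WFQ queues are empty
    """

    def __init__(self, pq, wfq, lpq):
        self.pq, self.wfq, self.lpq = list(pq), dict(wfq), list(lpq)
        self.queues = {name: deque() for name in [*self.pq, *self.wfq, *self.lpq]}
        self.credit = {name: 0 for name in self.wfq}  # packets left in the current WRR round

    def enqueue(self, name, packet):
        self.queues[name].append(packet)

    def _strict(self, names):
        # SP: always take from the first non-empty queue in priority order.
        for name in names:
            if self.queues[name]:
                return self.queues[name].popleft()
        return None

    def _weighted(self):
        backlogged = [n for n in self.wfq if self.queues[n]]
        if not backlogged:
            return None
        # Start a new round once every backlogged queue has used up its share.
        if all(self.credit[n] <= 0 for n in backlogged):
            for n in backlogged:
                self.credit[n] = self.wfq[n]
        for n in backlogged:
            if self.credit[n] > 0:
                self.credit[n] -= 1
                return self.queues[n].popleft()
        return None

    def dequeue(self):
        packet = self._strict(self.pq)        # PQ preempts all other queues
        if packet is None:
            packet = self._weighted()         # WFQ queues share bandwidth by weight
        if packet is None:
            packet = self._strict(self.lpq)   # LPQ only gets leftover bandwidth
        return packet


s = PortScheduler(pq=["EF"], wfq={"AF4": 3, "AF1": 1}, lpq=["BE"])
for i in range(4):
    for q in ("BE", "AF1", "AF4"):
        s.enqueue(q, f"{q}-{i}")
s.enqueue("EF", "EF-0")
order = [s.dequeue() for _ in range(13)]
print(order)
```

The EF packet leaves first even though it was enqueued last, AF4 gets three packets for every AF1 packet while both are backlogged, and BE is served only after everything else has drained. Nothing in this sketch rate-limits the PQ queue, so a continuous EF stream would starve every other queue, which is exactly the risk noted for PQ queues.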

Congestion Avoidance

Congestion avoidance drops packets proactively, based on the monitored usage of queues or memory buffers, before congestion worsens.

Huawei routers support two drop policies:

- Tail drop

- Weighted Random Early Detection (WRED)

Tail Drop

Tail drop is the traditional congestion avoidance mechanism: when congestion occurs and the queue is full, it drops all newly arrived packets.

Tail drop causes TCP global synchronization. When TCP detects packet loss, it enters the slow start state: it lowers its sending rate and then probes the network by increasing the rate again until packet loss is detected once more. Because tail drop discards all newly arrived packets when congestion occurs, all TCP sessions detect loss and enter slow start simultaneously, and packet transmission slows down. All TCP sessions then ramp up again at roughly the same time, congestion recurs, another burst of packets is dropped, and all TCP sessions enter slow start again. This cycle repeats continuously, severely reducing network resource utilization.
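A minimal Python sketch shows why tail drop hits every flow at once. It assumes a single FIFO queue measured in packets and a hypothetical burst from four TCP flows; the function names are illustrative. Once the queue is full, the drop decision ignores which flow a packet belongs to.

```python
from collections import deque


def tail_drop_enqueue(queue, packet, capacity):
    """Tail drop: accept the packet if the queue has room, otherwise drop it."""
    if len(queue) >= capacity:
        return False          # queue full: the newly arrived packet is discarded
    queue.append(packet)
    return True


def flows_hit_by_burst(queue, burst, capacity):
    """Return the set of flows that lose at least one packet from the burst."""
    hit = set()
    for flow_id, seq in burst:
        if not tail_drop_enqueue(queue, (flow_id, seq), capacity):
            hit.add(flow_id)
    return hit


queue = deque(("bg", i) for i in range(7))                        # 7 of 8 slots already used
burst = [(f, s) for s in range(2) for f in ("A", "B", "C", "D")]  # 4 flows, 2 packets each
print(sorted(flows_hit_by_burst(queue, burst, capacity=8)))       # ['A', 'B', 'C', 'D']
```

Only one packet of the burst fits; every one of the four flows loses at least one packet in the same instant, so all four senders detect loss and back off together. That shared loss event is the starting point of the global synchronization cycle.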

WRED

WRED is a congestion avoidance mechanism that drops packets before the queue overflows. It avoids TCP global synchronization by dropping packets randomly, so that TCP connections do not all retransmit and slow down at the same time: while one TCP connection reduces its sending rate after a packet loss, other TCP connections keep sending at a high rate. As a result, WRED improves bandwidth utilization.

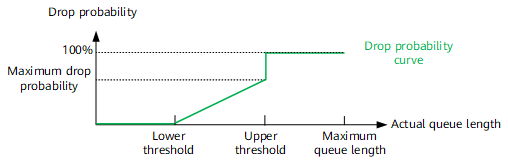

WRED sets lower and upper thresholds for each queue and defines the following rules:

- When the length of a queue is lower than the lower threshold, no packet is dropped.

- When the length of a queue exceeds the upper threshold, all newly arrived packets are tail dropped.

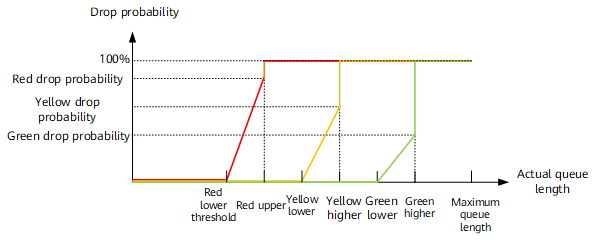

- When the length of a queue is between the lower threshold and the upper threshold, newly arrived packets are dropped randomly, with a drop probability capped by a configured maximum drop probability. The maximum drop probability is the drop probability when the queue length reaches the upper threshold. Figure 1 is a drop probability graph: the longer the queue, the higher the drop probability.
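The three rules above can be written as one function of the queue length. This sketch rests on an assumption the text does not state: that the probability rises linearly between the two thresholds, as in classic RED. Thresholds are expressed as percentages of the maximum queue length, and the values in the example are hypothetical.

```python
def wred_drop_probability(queue_len, low, high, max_p):
    """Drop probability (0.0 to 1.0) for a queue of length queue_len.

    low, high: lower and upper thresholds (same unit as queue_len)
    max_p:     maximum drop probability, reached at the upper threshold
    Assumes a linear ramp between the thresholds.
    """
    if not 0 <= low < high:
        raise ValueError("thresholds must satisfy 0 <= low < high")
    if queue_len < low:
        return 0.0                      # below the lower threshold: never drop
    if queue_len > high:
        return 1.0                      # above the upper threshold: tail drop
    return max_p * (queue_len - low) / (high - low)


# Hypothetical thresholds: 50% and 90% of the maximum queue length, max_p = 20%.
for fill in (40, 70, 90, 95):
    print(fill, wred_drop_probability(fill, low=50, high=90, max_p=0.2))
```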

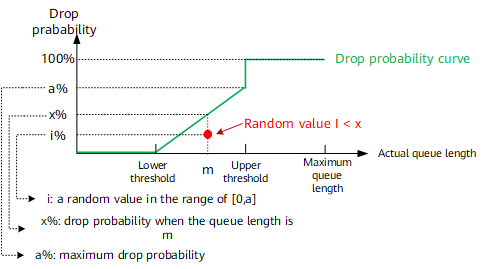

As shown in Figure 2, the maximum drop probability is a%, the current queue length is m, and the drop probability for the current queue is x%. WRED assigns each arriving packet a random value i% (0 < i% < 100%) and compares it with the drop probability of the current queue. If i falls between 0 and x, the newly arrived packet is dropped; if i falls between x and 100, the packet is not dropped.
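A sketch of the comparison step, assuming the drop probability x% has already been computed from the queue length. The function name and the use of Python's `random.Random` as the random source are assumptions made for illustration.

```python
import random


def wred_should_drop(drop_probability_pct, rng):
    """Decide whether to drop one arriving packet.

    drop_probability_pct: current drop probability x, in percent (0 to 100)
    rng: a random.Random instance, so results are reproducible
    """
    i = rng.random() * 100              # random value i in [0, 100)
    return i < drop_probability_pct     # i in [0, x): drop; i in [x, 100): keep


rng = random.Random(42)
trials = 100_000
drops = sum(wred_should_drop(10.0, rng) for _ in range(trials))
print(f"observed drop rate: {drops / trials:.3f} (target 0.100)")
```

Over many packets the fraction dropped converges to x%, which is what "drop probability" means in this comparison.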

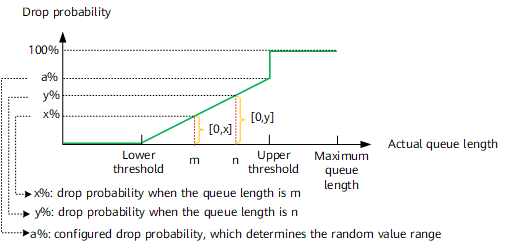

As shown in Figure 3, the drop probability for queue length m (lower threshold < m < upper threshold) is x%, so a newly arrived packet is dropped if its random value falls between 0 and x. For a longer queue length n (m < n < upper threshold), the drop probability is y%, so a newly arrived packet is dropped if its random value falls between 0 and y. Because the range 0 to y is wider than the range 0 to x, the random value is more likely to fall into it. Therefore, the longer the queue, the higher the drop probability.

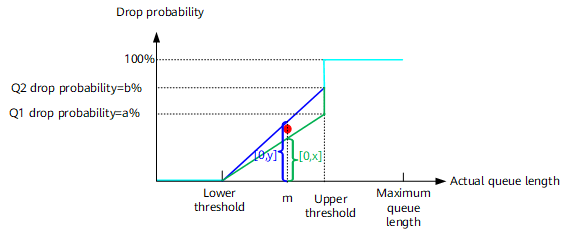

As shown in Figure 4, the maximum drop probabilities of two queues Q1 and Q2 are a% and b% respectively, with a < b. When both Q1 and Q2 have length m, their drop probabilities are x% and y% respectively. A newly arrived packet in Q1 is dropped if its random value falls between 0 and x, and a newly arrived packet in Q2 is dropped if its random value falls between 0 and y. Because the range 0 to y is wider than the range 0 to x, the random value is more likely to fall into it. Therefore, when queue lengths are the same, the higher the maximum drop probability, the higher the drop probability.
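Assuming a linear ramp between the lower threshold L and the upper threshold U, and assuming that Q1 and Q2 use the same thresholds (the text does not say so explicitly), both conclusions follow directly from the drop probability formula. The second line is the Figure 3 case (one queue, two lengths); the third is the Figure 4 case (two queues, one length):

```latex
\begin{aligned}
p(q) &= p_{\max}\,\frac{q - L}{U - L}, && L \le q \le U \\
x = p(m) &= a\,\frac{m - L}{U - L} \;<\; a\,\frac{n - L}{U - L} = p(n) = y, && L \le m < n \le U,\; a > 0 \\
x &= a\,\frac{m - L}{U - L} \;<\; b\,\frac{m - L}{U - L} = y, && L < m \le U,\; a < b
\end{aligned}
```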

You can configure WRED for each flow queue (FQ) and class queue (CQ) on Huawei routers. WRED allows the lower threshold, upper threshold, and drop probability to be configured for each drop precedence. Therefore, WRED can assign different drop probabilities to different service flows, or even to packets with different drop precedences within the same service flow.

Drop Policy Selection

Tail drop applies to PQ queues, which carry services with strict real-time requirements. Tail drop discards packets only when the queue overflows, and PQ queues can preempt the bandwidth of other queues. Therefore, when traffic congestion occurs, real-time services still receive as much bandwidth as possible.

WRED applies to WFQ queues. WFQ queues share bandwidth based on their weights and are prone to traffic congestion. Using WRED on WFQ queues effectively prevents TCP global synchronization when traffic congestion occurs.

WRED Lower and Upper Thresholds and Drop Probability Configuration

In actual applications, it is recommended that the WRED lower threshold start from 50% and vary with the drop precedence. As shown in Figure 5, the recommended settings are:

- Green packets: the lowest drop probability and the highest lower and upper thresholds.

- Yellow packets: a medium drop probability and medium lower and upper thresholds.

- Red packets: the highest drop probability and the lowest lower and upper thresholds.

As traffic congestion intensifies, red packets are dropped first because of their low lower threshold and high drop probability. As the queue length increases further, yellow packets start to be dropped, and green packets are dropped last. When the queue length exceeds the upper threshold for red, yellow, or green packets, all newly arrived packets of that color are tail dropped.
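The recommendation can be expressed as a per-color WRED profile. The percentages below are hypothetical values chosen only to respect the recommended ordering (the red lower threshold starts at 50%, and green has the highest thresholds and the lowest probability); they are not Huawei defaults. A linear ramp between thresholds is assumed, as in classic RED.

```python
# Hypothetical per-color WRED profile: percentages of the maximum queue length,
# picked only to follow the recommended ordering. Not Huawei default values.
PROFILE = {
    # color:  (lower threshold %, upper threshold %, max drop probability %)
    "green":  (80, 100, 10),
    "yellow": (65, 90, 20),
    "red":    (50, 75, 30),
}


def drop_probability(color, fill_pct):
    """Drop probability in percent for a packet of the given color."""
    low, high, max_p = PROFILE[color]
    if fill_pct < low:
        return 0.0                                   # below this color's lower threshold
    if fill_pct > high:
        return 100.0                                 # above its upper threshold: tail drop
    return max_p * (fill_pct - low) / (high - low)  # linear ramp (assumed)


for fill in (60, 70, 80, 95):
    print(fill, {color: drop_probability(color, fill) for color in PROFILE})
```

Reading the printed rows from top to bottom reproduces the order described in the text: red packets are dropped first, then yellow packets, and green packets last.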

Maximum Queue Length Configuration

The maximum queue length can be set on Huawei routers. As described in Queues and Congestion Management, when traffic congestion occurs, packets accumulate in the buffer and are delayed. The delay is determined by the queue buffer size and the output bandwidth allocated to the queue. With the same output bandwidth, the shorter the queue, the lower the delay.
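As a rough worked example, take the worst case of a packet that arrives at the tail of a full queue of size Q, served at the output bandwidth B allocated to that queue (the symbols, and the 1.25 MB and 1 Gbit/s figures with MB meaning 10^6 bytes, are illustrative). Its queuing delay is then:

```latex
d_{\max} = \frac{Q}{B}, \qquad
\frac{1.25\ \text{MB} \times 8\ \text{bit/byte}}{1\ \text{Gbit/s}}
= \frac{10^{7}\ \text{bit}}{10^{9}\ \text{bit/s}} = 10\ \text{ms}
```

Halving Q at the same B halves the delay, which is the sense in which a shorter queue means lower delay.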

The queue length should not be set too small. A short queue has little buffer, so even when the average traffic rate is low, a brief burst can overflow it and cause packet loss. The shorter the queue, the less burst traffic it can tolerate.

The queue length should not be set too large either, because the delay grows with it. This matters especially for TCP: after sending a packet, a TCP sender waits for a response from the peer, and if no response arrives before the retransmission timer expires, it retransmits the packet. A packet that stays buffered for too long is therefore no better than a dropped one.

For high-priority queues (CS7, CS6, and EF), a queue length of 10 ms × output queue bandwidth is recommended; for low-priority queues, 100 ms × output queue bandwidth is recommended.
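The rule of thumb is simple arithmetic. The sketch below applies it per queue, assuming integer bandwidths in bit/s and a result expressed in bytes (the unit used by the actual queue-length configuration may differ). The function name is hypothetical, and queue names other than CS7, CS6, and EF are only examples of low-priority queues.

```python
# Queues the text lists as high priority; all other queues use the 100 ms rule.
HIGH_PRIORITY_QUEUES = {"CS7", "CS6", "EF"}


def recommended_queue_bytes(queue_name, bandwidth_bps):
    """Recommended maximum queue length in bytes: buffering time x queue bandwidth.

    bandwidth_bps: output bandwidth allocated to the queue, in bit/s (integer)
    """
    delay_ms = 10 if queue_name in HIGH_PRIORITY_QUEUES else 100
    return delay_ms * bandwidth_bps // (1000 * 8)    # ms -> s, bits -> bytes


for name, bw in (("EF", 1_000_000_000), ("AF2", 1_000_000_000), ("BE", 100_000_000)):
    print(name, bw, recommended_queue_bytes(name, bw))
```

For example, an EF queue allocated 1 Gbit/s would get about 1.25 MB of buffer, while an AF2 queue with the same bandwidth would get about 12.5 MB, ten times as much, because low-priority traffic is expected to tolerate more delay in exchange for better burst absorption.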